Embodied Vehicle Navigation

This project develops embodied cognitive architectures for autonomous vehicle navigation.

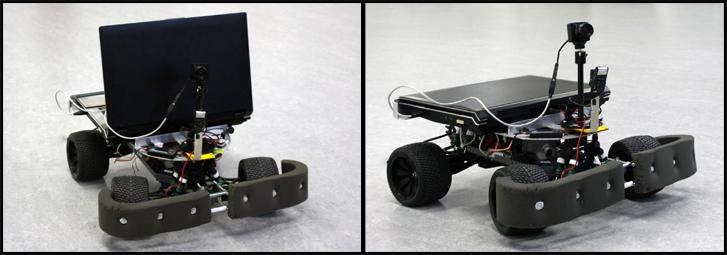

This work focuses on camera-equipped robotic systems that learn how to interact with and interpret the world. The work incorporates novel Computer Vision, Machine Learning and Data Mining algorithms in order to learn to navigate and discover important visual entities. This is achieved within a Learning from Demonstration (LfD) framework, where policies are derived from example state-to-action mappings. For autonomous navigation, a mapping is learnt from holistic image features (GIST) onto control parameters using Random Forest regression. Additionally, visual entities (road signs, e.g. a STOP sign) that are strongly associated with autonomously discovered modes of action (e.g. stopping behaviour) are discovered through a novel Percept-Action Mining methodology. The resulting sign detector is learnt without any supervision (no image labeling or bounding box annotations are used). The complete system is demonstrated on a fully autonomous robotic platform, featuring a single camera mounted on a standard remote control car. The robot carries a laptop PC that performs all the processing on board and in real time.
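The Learning from Demonstration step described above, regressing from an image descriptor onto a control value, can be illustrated with a small sketch. This is not the project's implementation: it is a minimal pure-Python bagged regression forest written for illustration, the 4-dimensional descriptors are synthetic stand-ins for real GIST features, the toy rule "steering equals feature 0" is invented, and all function and parameter names (`build_tree`, `fit_forest`, `predict`, `max_features`, and so on) are hypothetical.

```python
import random

def avg(values):
    """Arithmetic mean of a non-empty iterable."""
    values = list(values)
    return sum(values) / len(values)

def sse(values):
    """Sum of squared errors around the mean."""
    m = avg(values)
    return sum((v - m) ** 2 for v in values)

def build_tree(X, y, rng, depth=0, max_depth=4, min_leaf=3, max_features=2):
    """Grow one regression tree; each split tests a random subset of features."""
    if depth >= max_depth or len(y) < 2 * min_leaf:
        return avg(y)  # leaf: mean demonstrated control value
    feats = rng.sample(range(len(X[0])), min(max_features, len(X[0])))
    best = None
    for f in feats:
        for t in sorted({row[f] for row in X})[:-1]:  # candidate thresholds
            left = [i for i, row in enumerate(X) if row[f] <= t]
            right = [i for i, row in enumerate(X) if row[f] > t]
            if len(left) < min_leaf or len(right) < min_leaf:
                continue
            cost = sse([y[i] for i in left]) + sse([y[i] for i in right])
            if best is None or cost < best[0]:
                best = (cost, f, t, left, right)
    if best is None:
        return avg(y)  # no valid split found
    _, f, t, left, right = best
    children = [
        build_tree([X[i] for i in idx], [y[i] for i in idx],
                   rng, depth + 1, max_depth, min_leaf, max_features)
        for idx in (left, right)
    ]
    return (f, t, children[0], children[1])

def predict_tree(node, x):
    """Descend from the root until a leaf (a plain float) is reached."""
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if x[f] <= t else right
    return node

def fit_forest(X, y, n_trees=15, seed=0):
    """Train each tree on a bootstrap resample of the demonstrations."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]
        forest.append(build_tree([X[i] for i in idx], [y[i] for i in idx], rng))
    return forest

def predict(forest, x):
    """Average the per-tree predictions to get the control output."""
    return avg(predict_tree(tree, x) for tree in forest)

# Synthetic demonstrations (assumption): descriptor -> steering command.
data_rng = random.Random(1)
X = [[data_rng.uniform(-1, 1) for _ in range(4)] for _ in range(120)]
y = [row[0] for row in X]  # toy rule: steer in proportion to feature 0
forest = fit_forest(X, y)
print(predict(forest, [0.9, 0.0, 0.0, 0.0]), predict(forest, [-0.9, 0.0, 0.0, 0.0]))
```

In a real system the rows of `X` would be descriptors computed from camera frames and `y` the control signals recorded while a human drives; at run time each new frame's descriptor would be passed to `predict` to obtain the control command.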

Publications:

Autonomous navigation and sign detector learning - In Winter Vision Meetings, IEEE Workshop on Robot Vision, 2013. WoRV2013 at Winter Vision Meetings 2013

Affordance Mining: Forming Perception Through Action - In Proc. Asian Conference on Computer Vision, LNCS 6492-6495, volume 4, pages 2620-2633, 2010. ACCV 2010

Robotics Platform:

CVL's mobile robot:

- RC car with mounted laptop, camera and webcam

- 4-core Intel Core i7 CPU + Nvidia 480M GPU

- Point Grey USB camera

Results:

Learning path following, obstacle avoidance and sign detection ( [ Ellis et al WoRV 2013 ], [ Hedborg et al WoRV 2013 ] ):

Robotdog: A Person Following Robot ( [ Robotdog CDIO project ] ):

Vehicle following by percept-action affordance mining ( [ Ellis et al ACCV 2010 ] ):

Evaluating autonomous navigation - reconfigurable track:

Related projects:

Contacts:

Liam Ellis:

http://users.isy.liu.se/cvl/liam/

Last updated: 2022-01-20

LiU home page
