Beyond Correlation Filters: Learning Continuous Convolution Operators for Visual Tracking

Accepted as an oral presentation at ECCV 2016 (1.8% acceptance rate).

Ranked first in the Visual Object Tracking challenge 2016 (VOT2016).

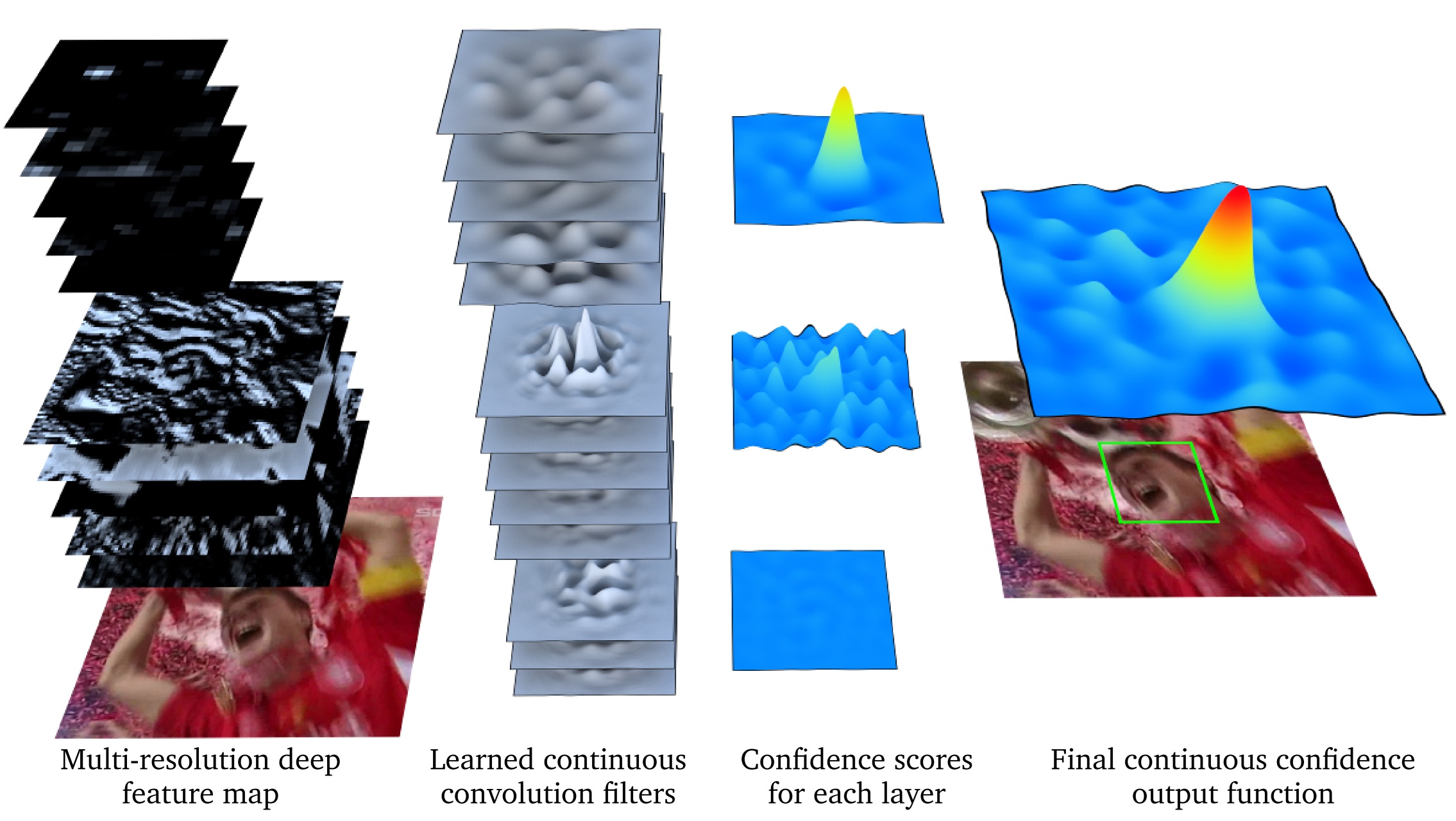

Discriminative Correlation Filters (DCF) have demonstrated excellent performance for visual object tracking. The key to their success is the ability to efficiently exploit available negative data by including all shifted versions of a training sample. However, the underlying DCF formulation is restricted to single-resolution feature maps, significantly limiting its potential.

In this work, we go beyond the conventional DCF framework and introduce a novel formulation for training continuous convolution filters. We employ an implicit interpolation model to pose the learning problem in the continuous spatial domain. Our proposed formulation enables efficient integration of multi-resolution deep feature maps, leading to superior results on three object tracking benchmarks: OTB-2015 (+5.1% in mean OP), Temple-Color (+4.6% in mean OP), and VOT2015 (20% relative reduction in failure rate).
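To illustrate why a continuous formulation makes multi-resolution fusion straightforward, here is a minimal NumPy sketch in one dimension. It is not the authors' implementation: it assumes a periodic domain of length `T` and swaps in a plain trigonometric (Fourier series) interpolant as a stand-in for the interpolation model. The function name `interpolate_periodic`, the channel sizes, and the random data are all hypothetical.

```python
import numpy as np

def interpolate_periodic(x, t, T=1.0):
    """Evaluate the trigonometric interpolant of samples x at positions t.

    Assumption: x[n] was sampled at t_n = n * T / N on a periodic domain [0, T).
    An odd N avoids the ambiguous Nyquist term.
    """
    x = np.asarray(x, dtype=float)
    t = np.atleast_1d(np.asarray(t, dtype=float))
    N = x.size
    X = np.fft.fft(x) / N                 # Fourier series coefficients
    k = np.fft.fftfreq(N, d=1.0 / N)      # integer frequencies centred on 0
    basis = np.exp(2j * np.pi * np.outer(t, k) / T)
    return (basis @ X).real

# Two hypothetical feature channels covering the same region at
# different resolutions (5 and 9 samples).
rng = np.random.default_rng(0)
coarse = rng.standard_normal(5)
fine = rng.standard_normal(9)

# Once interpolated, both channels live on the same continuous domain,
# so they can be evaluated at identical positions and combined.
t = np.linspace(0.0, 1.0, 50, endpoint=False)
fused = interpolate_periodic(coarse, t) + interpolate_periodic(fine, t)
```

The plain sum in the last line only demonstrates that the two channels share a domain after interpolation; in a tracker, each channel would first be convolved with its own learned filter.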

Additionally, our approach is capable of sub-pixel localization, crucial for the task of accurate feature point tracking. We also demonstrate the effectiveness of our learning formulation in extensive feature point tracking experiments.
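As a rough illustration of sub-pixel localization, the sketch below refines the peak of a one-dimensional score function between its sample positions. It assumes the scores are represented by a Fourier series on a periodic domain and uses a coarse grid maximum followed by Newton iterations; the function name `subpixel_peak`, the iteration count, and the synthetic scores are hypothetical and chosen only for this example.

```python
import numpy as np

def subpixel_peak(s, T=1.0, iters=5):
    """Locate the maximum of the Fourier-series interpolant of samples s.

    Assumption: s[n] was sampled at t_n = n * T / N on a periodic domain [0, T).
    """
    s = np.asarray(s, dtype=float)
    N = s.size
    c = np.fft.fft(s) / N                         # Fourier series coefficients
    w = 2j * np.pi * np.fft.fftfreq(N, d=1.0 / N) / T
    t = np.argmax(s) * T / N                      # coarse, grid-level estimate
    for _ in range(iters):
        e = c * np.exp(w * t)
        d1 = (w * e).sum().real                   # first derivative at t
        d2 = (w ** 2 * e).sum().real              # second derivative at t
        if d2 >= 0:                               # not concave here: stop refining
            break
        t -= d1 / d2                              # Newton step towards the maximum
    return t % T

# Synthetic scores whose true peak (0.23) lies between grid points.
n = np.arange(9)
scores = np.cos(2 * np.pi * (n / 9 - 0.23))
peak = subpixel_peak(scores)
```

The grid maximum alone would report 2/9 ≈ 0.222 here; the Newton refinement moves the estimate to the off-grid location of the peak.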

Publication

Martin Danelljan, Andreas Robinson, Fahad Khan, Michael Felsberg.

Beyond Correlation Filters: Learning Continuous Convolution Operators for Visual Tracking.

In Proceedings of the European Conference on Computer Vision (ECCV), 2016.

Supplementary material

Poster

VOT2016 Presentation slides

Code

Raw Results

Raw result files for the OTB, Temple-Color, VOT2015 and VOT2016 datasets.

LiU Homepage
